The widely cited $5.5 million training figure actually refers to DeepSeek V3, not R1, and even then it covers only a fraction of V3's true training cost. As the V3 paper states: "Note that the aforementioned costs include only the official training of DeepSeek-V3, excluding the costs associated with prior research and ablation experiments on architectures, algorithms, or data."

Pioneering exploration and latecomer catch-up require compute on completely different scales. For a given generation of models, the compute needed to train them tends to fall exponentially every N months, thanks to:

- Algorithmic advances (e.g., FP8 training, Mixture-of-Experts);

- The steady decline in the cost of compute; and

- More efficient replication methods, such as distillation, which condenses data.

Exploration inherently involves waste, while latecomers "standing on the shoulders of giants" can avoid most of it. For instance, the training cost of OpenAI's o1 must have far exceeded that of GPT-4, and likewise R1's training cost is certainly higher than V3's. Going from o3 to o4/o5, or from R1 to R2/R3, training compute will only increase.
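The claim that the cost of reaching a fixed capability level falls exponentially every N months can be made concrete with a toy model. The sketch below assumes a clean halving every N months; the function name `compute_cost`, the starting cost of 100 units, and N = 12 are hypothetical placeholders, not figures from DeepSeek, OpenAI, or any published source.

```python
def compute_cost(initial_cost: float, months: float, halving_period: float) -> float:
    """Toy model (hypothetical): cost to reach a fixed capability level
    after `months`, assuming the cost halves every `halving_period` months."""
    if halving_period <= 0:
        raise ValueError("halving_period must be positive")
    # Exponential decay: each elapsed halving period multiplies the cost by 0.5.
    return initial_cost * 0.5 ** (months / halving_period)


if __name__ == "__main__":
    # Hypothetical pioneer run costing 100 units, with N = 12 months.
    for m in (0, 12, 24, 36):
        print(m, compute_cost(100.0, m, 12.0))
```

Under this toy model, a latecomer that starts 24 months after a pioneer pays a quarter of the pioneer's cost for the same capability, and that is before counting the exploration waste the pioneer also absorbed. Actual cost declines need not follow a smooth exponential; the point is only the shape of the argument.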

Lower per-run training costs don't mean total training spending will go down. When training efficiency improves, do labs cut their investment? No. The real logic is that higher efficiency lets them push compute usage to its limits to extract greater returns. Take DeepSeek as an example: despite strong infrastructure cost optimization, stockpiling GPUs early, and not expanding much into API services, it is still short on GPUs. By comparison, some U.S. labs that have spent far more money do look a bit awkward. But will they now switch to focusing on cost efficiency? Unlikely. Instead, they will absorb the methods DeepSeek has open-sourced and apply them on their much larger compute resources to achieve even bigger breakthroughs. The real threat to compute demand is hitting the data wall; if anything, better efficiency could push the ceiling of model capability even higher.

DeepSeek represents a victory of open source over closed source. Its contributions to the community will quickly translate into prosperity for the open-source ecosystem as a whole. If there is any real "loser" here, it is likely the closed-source models. China has already been through this: the fear of being overrun by Llama. Chinese closed-source model companies that couldn't keep up with Llama 3 were forced to shut down, pivot to applications, or go open source. Today, Chinese open-source efforts are challenging North American closed-source models. If a company can't even match R1 (let alone the upcoming R2 and R3), the value of its API will drop to essentially zero. That said, this process will undoubtedly shrink the number of companies training models, and quickly.

Most importantly, everything discussed above concerns training, whereas future demand will clearly be driven by inference. One overlooked point is that DeepSeek's impact on inference costs is even more striking than its impact on training. For example, AMD has just announced support for DeepSeek V3. The elegance of the DeepSeek architecture is that, compared with a standard transformer, it doesn't require any special operators. In theory, this makes it easier to support on many types of GPUs, a flexibility that was essentially forced by GPU export controls. DeepSeek-V3 can be privately deployed and fine-tuned, giving downstream applications far more room than they had in the closed-source era. Over the next one to two years, we are likely to see a richer range of inference chips and a more vibrant LLM application ecosystem.

How can the U.S. reconcile its still-massive infrastructure investments with the waste from past spending? U.S. CSPs (cloud service providers) are still scrambling for electricity allocations and have already locked in capacity through 2030. Over the past two years, CSPs have poured hundreds of billions of dollars into infrastructure, and none of them did so purely for training. Most of that investment was driven by their internal business needs and the growth of inference services:

- Microsoft provisioned compute credits for OpenAI;

- AWS rents compute to downstream customers for training;

- Meta and xAI use some of their compute for their own training, but the bulk of it was built for their recommendation systems or autonomous-driving businesses.

Microsoft has even moved away from Sam Altman's all-in push on training, choosing instead to prioritize the more reliable returns from inference (as Satya Nadella has publicly stated).

Objectively speaking, DeepSeek has exposed some wasted training investment by North American CSPs, but that was the necessary cost of exploring a new market. Looking ahead, the broad prosperity of open source will ultimately benefit these "middlemen." CSPs aren't the miners taking the risks; they're the ones selling the shovels. They build more valuable application ecosystems on top of these models, whether open or closed. GPUs won't be used solely for training, and an increasing share will be allocated to inference. If more efficient training accelerates model progress and enriches the application ecosystem, why would they stop investing?

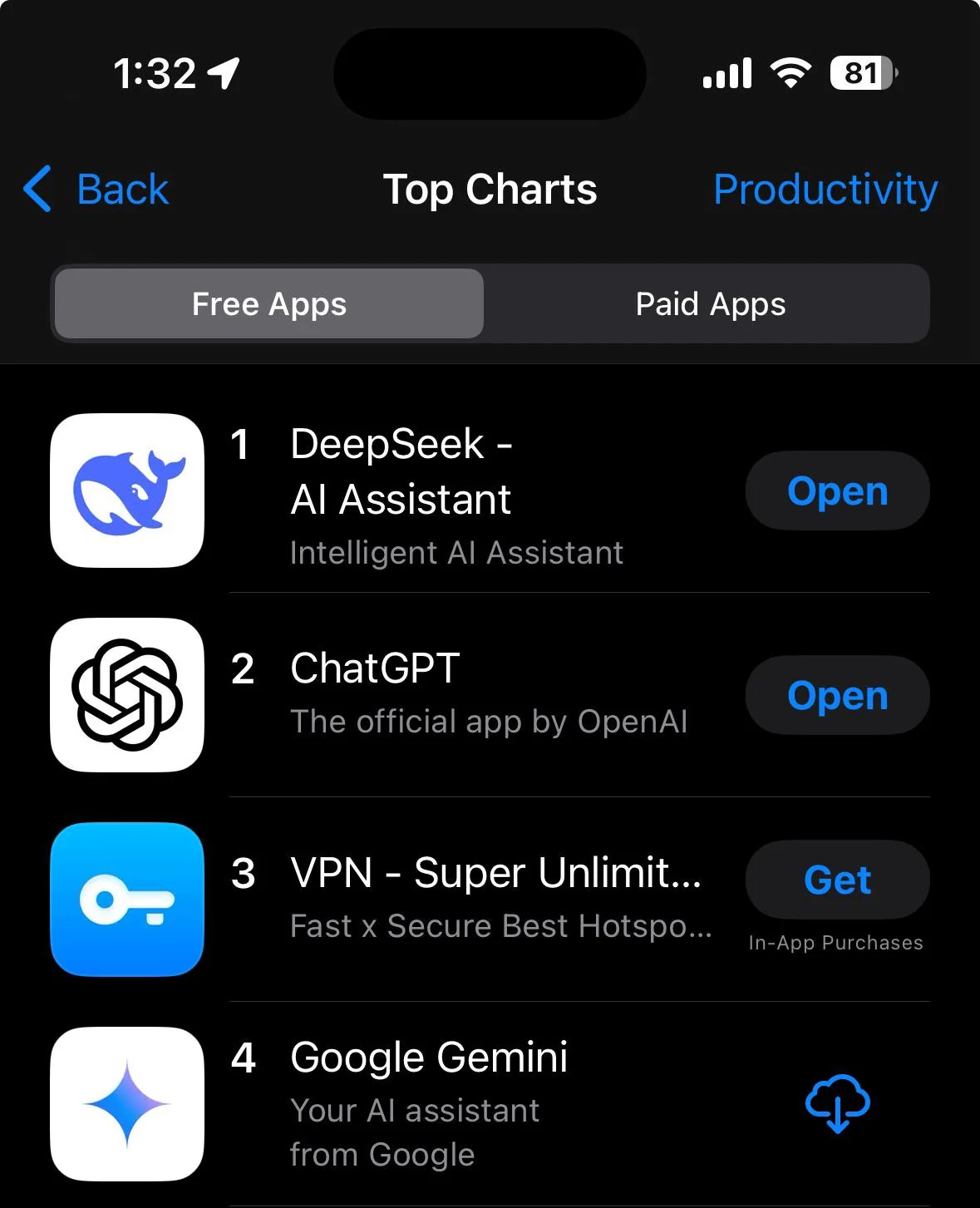

DeepSeek may not make compute or NVIDIA obsolete, but it is certainly making closed-source AI model makers anxious. Meanwhile, most AI practitioners around the world are excited. DeepSeek has cut the cost of state-of-the-art reasoning models to less than one-tenth of their closed-source counterparts, making them accessible to everyone. On X (formerly Twitter), the reaction has been overwhelmingly positive, with many AI developers already using distilled R1 models to get reasoning on par with o1 at near-zero cost.

Training a model of similar performance with fewer GPUs is not just about saving money; it amounts to an improvement in the scaling law itself. It suggests that applying these methods at a larger GPU scale could lift model capabilities to an entirely new level, bringing AGI closer to reality. DeepSeek has pushed the whole open-source AI ecosystem forward and accelerated progress across the industry.

Source: https://mp.weixin.qq.com/s/LOep19BeVH4QO5MLOT4OD
