DeepSeek Launches Next-Generation Model on Par with OpenAI-o1 Official Version

Another step forward

DeepSeek just dropped its latest model, DeepSeek-R1. Performance-wise, it matches OpenAI-o1’s official release, and here’s the best part: it’s fully open-source under the MIT License. The API is live and affordable, and you select the model with a single parameter. Plus, they’ve released six distilled smaller models, with the 32B and 70B versions rivaling OpenAI o1-mini across multiple capabilities. They’re also sharing all the training techniques to encourage collaboration. You can hop on the updated website or app to test out the “Deep Thinking” mode and see it in action.

Below is the transcript of the official press release:

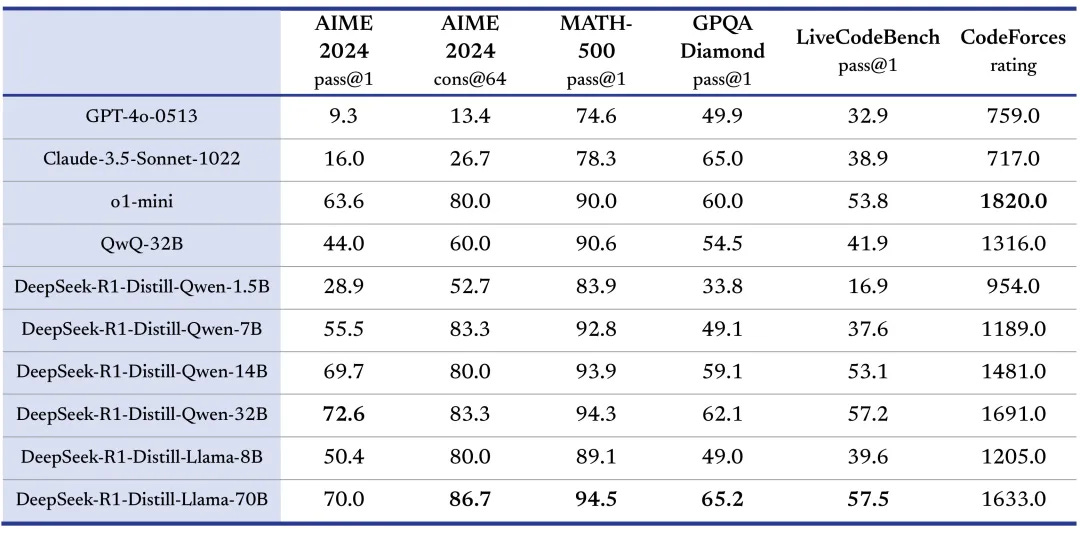

Today, we are officially releasing DeepSeek-R1 and simultaneously open-sourcing the model weights.

DeepSeek-R1 is licensed under the MIT License, allowing users to train other models using distillation techniques with R1.

The DeepSeek-R1 API is now live, enabling users to access chain-of-thought outputs by setting model='deepseek-reasoner'.

The DeepSeek official website and app have been updated and are now available.

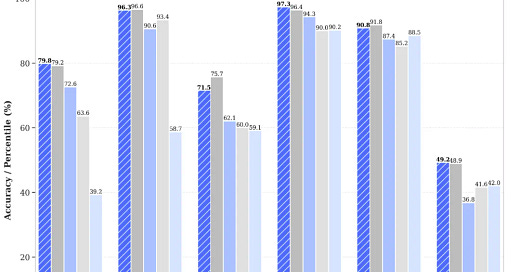

Performance aligned with OpenAI-o1 official release

DeepSeek-R1 leverages large-scale reinforcement learning techniques in its post-training phase, significantly enhancing its reasoning capabilities even with minimal labeled data. In tasks such as mathematics, coding, and natural language reasoning, its performance is on par with the official version of OpenAI-o1.

Here, we are fully disclosing the training techniques for DeepSeek-R1 in hopes of fostering open exchange and collaborative innovation within the tech community.

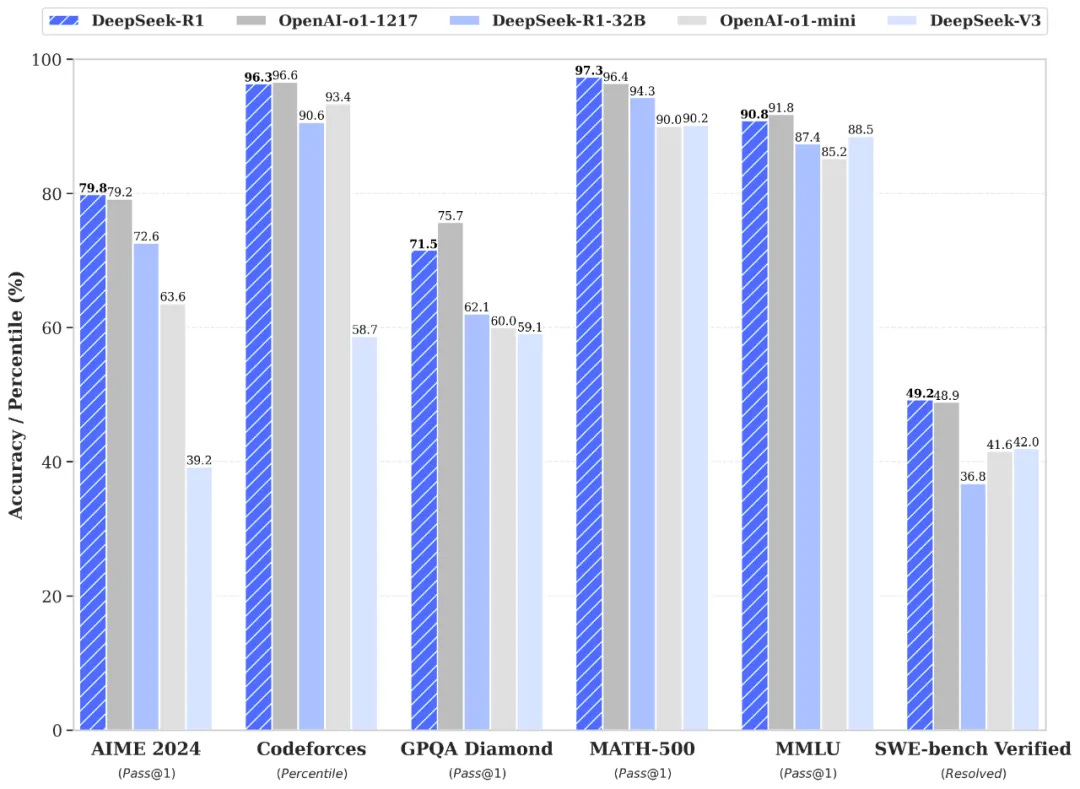

Distilled small models surpass OpenAI o1-mini

In addition to open-sourcing the 660B models DeepSeek-R1-Zero and DeepSeek-R1, we have distilled six smaller models using outputs from DeepSeek-R1. Among these, the 32B and 70B models have achieved performance comparable to OpenAI o1-mini across multiple capabilities.

Open License and User Agreement

To promote and encourage the development of the open-source community and industry ecosystem, alongside the release and open-sourcing of R1, we have made the following adjustments to licensing and authorization agreements:

Unified Open Source License: MIT

Previously, we introduced the DeepSeek License tailored for large model open-source scenarios, drawing on industry best practices. However, experience has shown that non-standard open-source licenses may increase the cognitive burden on developers. To address this, our open-source repositories, including model weights, will now uniformly adopt the standardized and permissive MIT License. This fully open-source approach imposes no restrictions on commercial use and requires no application for usage.

Product Agreement Explicitly Allows "Model Distillation"

To further promote technology openness and sharing, we have decided to support users in performing "model distillation." The online product user agreement has been updated to explicitly allow users to utilize model outputs for training other models through distillation techniques.

App and Web Access

Log in to the DeepSeek official website or the official app, activate the "Deep Thinking" mode, and you can leverage the latest DeepSeek-R1 to perform various reasoning tasks.

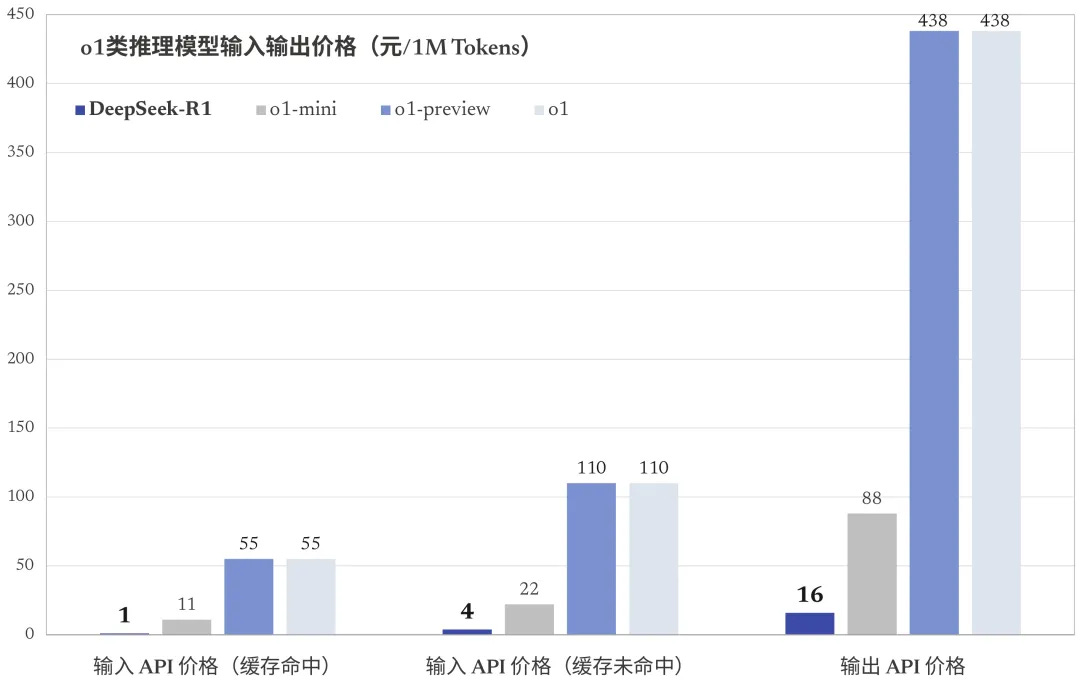

API and Pricing

The pricing for DeepSeek-R1 API services is as follows:

Input Tokens:

¥1 per million tokens (cache hit)

¥4 per million tokens (cache miss)

Output Tokens:

¥16 per million tokens

This pricing structure ensures flexibility and affordability for users leveraging the DeepSeek-R1 API for their applications.
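To make the rates above concrete, here is a small sketch that estimates the cost of a workload in yuan. The function name, the constant names, and the example token counts are illustrative assumptions; only the three per-million rates come from the price list above.

```python
# Rates in yuan per one million tokens, taken from the price list above.
INPUT_CACHE_HIT_PER_M = 1
INPUT_CACHE_MISS_PER_M = 4
OUTPUT_PER_M = 16

TOKENS_PER_MILLION = 1_000_000


def estimate_cost_cny(cache_hit_tokens: int, cache_miss_tokens: int, output_tokens: int) -> float:
    """Return the estimated cost in yuan for the given token counts."""
    total_micro_yuan = (
        cache_hit_tokens * INPUT_CACHE_HIT_PER_M
        + cache_miss_tokens * INPUT_CACHE_MISS_PER_M
        + output_tokens * OUTPUT_PER_M
    )
    return total_micro_yuan / TOKENS_PER_MILLION


if __name__ == "__main__":
    # Hypothetical workload: 200k cached input tokens, 800k uncached, 500k output.
    # 0.2 + 3.2 + 8.0 = 11.4 yuan
    print(estimate_cost_cny(200_000, 800_000, 500_000))
```

Under these rates, output tokens dominate the bill for most reasoning workloads, since they cost four times as much as uncached input.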

Detailed API Usage Guide

For comprehensive instructions on how to use the DeepSeek-R1 API, please refer to the official documentation:

https://api-docs.deepseek.com/zh-cn/guides/reasoning_model

The guide provides step-by-step details on integrating and utilizing the reasoning model effectively in your applications.
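As a rough illustration of the model='deepseek-reasoner' setting mentioned in the release, the sketch below builds a chat request and separates the chain of thought from the final answer. Several details are assumptions not stated in the release and should be checked against the official guide: the endpoint URL, the OpenAI-style request and response layout, the `reasoning_content` field name, and the `DEEPSEEK_API_KEY` environment variable. The helper names are hypothetical.

```python
import json
import os
import urllib.request

# Assumed endpoint for an OpenAI-style chat API; confirm in the official guide.
API_URL = "https://api.deepseek.com/chat/completions"


def build_request(prompt: str, api_key: str) -> urllib.request.Request:
    """Build (but do not send) a chat request that selects the reasoning model."""
    payload = {
        "model": "deepseek-reasoner",  # the setting named in the release
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


def split_reply(response: dict) -> tuple[str, str]:
    """Return (chain_of_thought, final_answer) from a parsed response.

    Assumes the reasoning text arrives in a `reasoning_content` field
    next to the usual `content` field; missing fields become "".
    """
    message = response["choices"][0]["message"]
    return message.get("reasoning_content", ""), message.get("content", "")


if __name__ == "__main__":
    key = os.environ.get("DEEPSEEK_API_KEY")  # hypothetical variable name
    if key:
        request = build_request("Which is larger, 9.11 or 9.8?", key)
        with urllib.request.urlopen(request) as resp:
            reasoning, answer = split_reply(json.load(resp))
        print("Reasoning:", reasoning)
        print("Answer:", answer)
```

Nothing is sent unless an API key is present in the environment, so the helpers can be exercised offline with a hand-written response dictionary.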