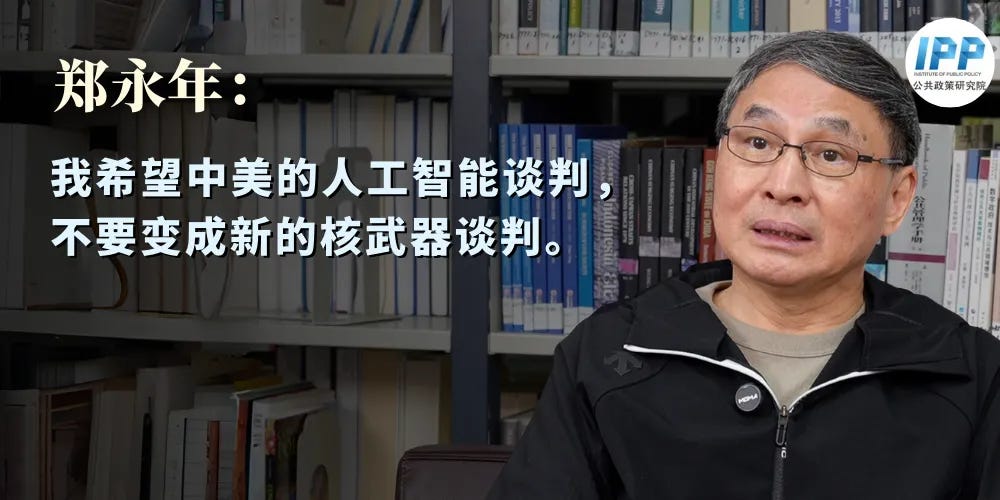

Zheng Yongnian: Hoping that the Sino-U.S. Dialogue on AI Does Not Turn into a New "Nuclear Weapons Negotiation"

In a recent interview, Professor Zheng Yongnian (郑永年), a prominent Chinese political scientist and commentator, expressed his concerns and hopes regarding the ongoing and future dialogue between China and the United States on AI. He drew a parallel between today's AI discussions and the Cold War-era nuclear weapons negotiations, and emphasized the need for a different approach.

Interviewer

We know that "AI governance" has become a global issue. Recently, China and France issued a joint statement specifically on artificial intelligence and global governance, in which both sides pledged to strengthen cooperation on global AI governance. Before that, U.S. Secretary of State Antony Blinken visited China, and despite many conflicts and differences of opinion, the two countries reached a consensus on holding a dialogue on artificial intelligence.

At the same time, the U.S. has recently been moving to introduce legislation restricting the export of large AI models, and the dispute behind the TikTok ban also involves data flows and AI technology.

On the one hand, countries emphasize joint governance; on the other, they act independently and even treat each other as rivals. How do you view this trend?

Zheng Yongnian

Artificial intelligence has become an unavoidable topic in dialogue between major powers. I remember Henry Kissinger once said, "The field where China and the U.S. need to engage in serious dialogue is artificial intelligence." He also suggested that China-U.S. negotiations on AI are akin to the nuclear weapons negotiations of the Cold War.

During the Cold War, the U.S. and the Soviet Union each accumulated a nuclear arsenal large enough to destroy the other. This mutual existential threat forced both sides to sit down and negotiate. Nuclear weapons were therefore not only the core of the U.S.-Soviet confrontation and strategic rivalry but also the window for dialogue between the two sides.

Kissinger believed that today, issues such as climate change, public health, and nuclear non-proliferation are no longer enough to bring China and the U.S. to the negotiating table in earnest. Only artificial intelligence can do this, because the potential threats posed by AI are devastating not only to China and the U.S. but to the entire world.

Unlike nuclear weapons, which are singular and static, artificial intelligence is abstract and dynamic. It encompasses big data and robotics and permeates every aspect of political, economic, and social life, forming an ecosystem that is reshaping how people produce and live. From this perspective, AI's impact on the global geopolitical order and on human society could well surpass that of nuclear weapons.

Fortunately, some foundation for China-U.S. dialogue on artificial intelligence already exists. At the end of last year, during the San Francisco meeting between the Chinese and U.S. leaders, both sides agreed to establish an intergovernmental dialogue mechanism on AI. Blinken subsequently visited China, and recently representatives of the two countries held the first China-U.S. intergovernmental dialogue on AI in Geneva, Switzerland, which produced some results. Although clear differences remain, shaped in part by the U.S.'s binary, oppositional mindset, the fact that the dialogue continues is a positive sign.

In fact, despite intense competition in artificial intelligence, the two countries' different AI development models still offer many opportunities for dialogue and complementarity. The U.S. leads in large-model technology and algorithm development, while China has certain advantages in technology regulation and big-data applications.

I often compare technological development to a car, which needs both an "engine" and "brakes." AI development in the U.S. is currently running at high speed, with tech giants continuously producing new achievements such as Sora and GPT-4. This is partly because the U.S. has not applied the "brakes": it has taken a laissez-faire approach to artificial intelligence, with virtually no federal regulatory framework and only state-level legislation.

So one could also say that American artificial intelligence is like a "spear," emphasizing offense, while Chinese artificial intelligence is more like a "shield," emphasizing defense. I believe these two approaches are not merely in conflict; they can actually complement each other and leave room for mutual benefit.

The rapid development of artificial intelligence, combined with a lack of regulation, has already created some deep-seated risks for American society. For example, AI-generated content (AIGC) has been used to fabricate fake news and leak private data, and AI has been used to produce deepfakes and content that misleads voters.

There have been reports of people in the U.S. using AI tools to generate "fake Biden" calls urging voters not to vote in a state primary. As AI tools become ever more affordable, and in the absence of regulation, anyone can use them to disrupt American elections, which deeply worries some American politicians.

There are currently voices in both U.S. political parties calling for AI regulation, though they differ on how far it should go. Meanwhile, some tech companies are actively lobbying against such regulation, citing "competition from China" as a major reason.

In short, if the U.S. continues to develop AI without regulation, it will have negative social consequences. China, by contrast, has made comparatively more progress in AI regulation and rule-making.

That is why I always say that China and the U.S. can learn from each other in artificial intelligence, and the U.S. in particular should learn from China's regulatory measures. Unfortunately, restricting China's AI development is a U.S. priority. If this trend continues, it could lead to something like the nuclear arms race of the Cold War. Even so, the U.S. and the Soviet Union did eventually sit down to negotiate.

However, the U.S.-Soviet nuclear negotiations produced only a cautious "cold peace." Personally, I hope that China-U.S. negotiations on AI do not become a new version of those "nuclear weapons negotiations."

Artificial intelligence is different from nuclear weapons. Nuclear weapons serve mainly as a deterrent and are relatively removed from everyday life, whereas AI is something we interact with every day. If it turns into a purely strategic tool of competition, it would be a disaster for both countries.

Moreover, AI technology diffuses and spreads easily, which makes it difficult to restrict completely. In a globalized world, neither China nor the U.S. can fully shut out the other's AI.

Therefore, I believe there is ample room for in-depth dialogue between China and the U.S. on artificial intelligence. Although the U.S. now aims for absolute dominance in AI, in my view it is overestimating itself in certain respects. China is very adept at the commercial application of AI, and we have a real chance of surpassing the U.S. there.

But we also need to reflect on ourselves. Our approach is regulation-oriented, and as a next step we need to learn from the way the U.S. promotes AI development. Having done well at building the "brakes," we now need to find ways to power up the "engine."

I hope that the consensus reached by the Chinese and U.S. presidents can serve as a good starting point, and that China-U.S. negotiations on AI can help both sides find more areas for mutual learning and exchange. That would benefit the development of both countries and world peace.

You also mentioned the China-France joint statement on AI. Looking at the global AI landscape, Europe represents yet another development model. The Council of the EU recently gave final approval to the Artificial Intelligence Act, which focuses on preventing "AI risks." The Act sets up a strict classification of AI systems according to their so-called "level of risk to society," backed by heavy regulatory requirements and penalties intended to deter AI companies from violations.

This regulatory model has also raised many concerns. It will significantly affect companies operating in the EU and may hinder innovation, especially in generative AI.

In fact, although Europe leads in rule-making, it has fallen behind the U.S., and even China, in many aspects of the AI race. The EU's rule-oriented model, with its dense and strict regulations, including those governing data, has slowed its pace of development to some extent.

The largest companies in AI are currently all based in the U.S., and they have essentially monopolized the development of today's core technologies. European countries naturally do not want to see U.S. "hegemony" in AI, and they are actively promoting investment and seeking cooperation.